Published Date: Dec 10, 2024

Exclusive

Mental Health

Addiction

Drugs

What I learned about success and failing forward using AI in healthcare

How We Made AI Make Mental Health Clinicians Smarter

Say what you will about artificial intelligence, but after building a team to explore how AI can make behavioral health smarter, I can finally say we've met our original goal: making AI helpful to clinicians in an ethical way. More specifically, we've identified nine ways AI can enhance their practice.

In my view, using AI to give clinicians deeper cognitive insight into their patients was risky, not technically but as an investment.

Modern behavioral health is still young in its use of technology. Electronic Health Records (EHRs) are fine for billing, but using AI for clinical insight requires another level of sophistication. Would practitioners see this as a legitimate way forward, one that challenges the ingrained "do this for a fee" mindset of working just to get paid? We would have to wait and see.

Our 10,000-Hour Rule: Did It Work?

There's a theory popularized by Malcolm Gladwell in his book “Outliers: The Story of Success,” known as the 10,000-hour rule. It suggests that to become an expert in a skill, you need to practice it for at least 10,000 hours; with enough practice, anyone can reach professional-level proficiency. I found that the same is true of AI development. Sort of.

Can Gladwell’s theory be applied here? Not exactly. It took five times Gladwell's figure, roughly 50,000 hours!

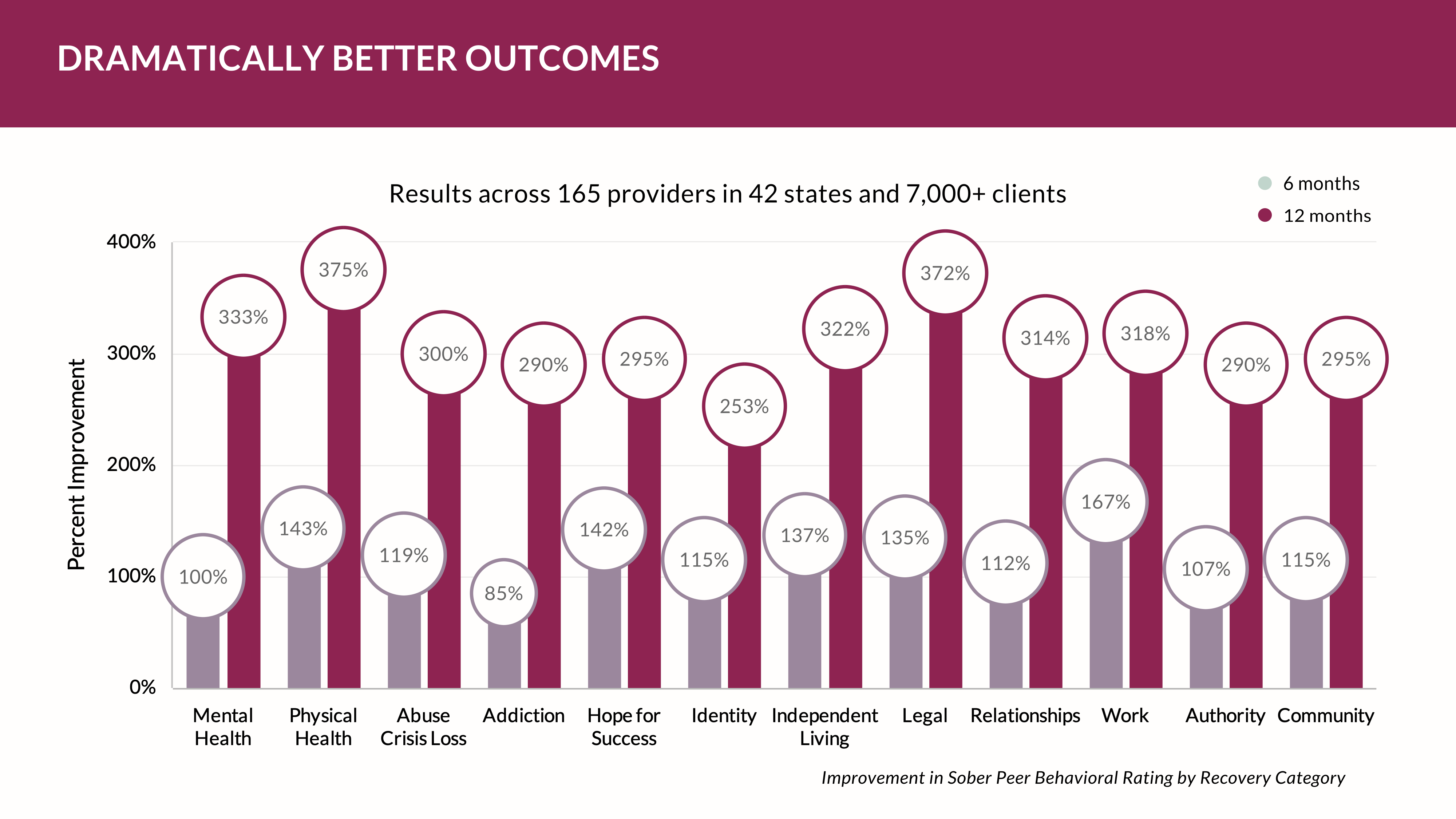

I didn't fully realize how far making AI smart would exceed Gladwell's 10,000 hours. So we trained at institutions like MIT and Harvard and even collaborated with OpenAI as an early developer. Five years of testing AI with behavioral health clients, technicians, and practices was a significant challenge. Over that time, we collaborated with 2,500 clinicians across 7 million days of patient observation, and they showed us how AI could make them smarter at coaching patients toward better long-term outcomes.

What we've learned, though, has reshaped the way we invest in healthcare software platforms and how we handle and use data.

Realizing the Need for Change

In the "do more with less" world of behavioral health, there's a desperate need for tools that enhance clinicians' capabilities without adding to their workload. The industry faces increasing demands with limited resources, and it's only going to get worse.

A while back, I noticed that clinicians were overwhelmed with growing caseloads and felt they weren't delving deep enough into each patient's issues. To help them provide the best care, I realized we needed to develop a tool that could augment their abilities without adding to their burden. That's when we started exploring AI.

Crafting Effective AI Prompts

We quickly learned that how clinicians use AI is crucial. To truly benefit, they need to do two things: first, give the AI their most insightful questions, drawn from their clinical training; and second, gather extensive, case-specific data from their patients rather than relying on generic information from the internet.

That showed us where the equity in behavioral health AI would lie, and there were no shortcuts to it.

We encouraged clinicians to leverage their expertise to formulate insightful questions for the AI. Instead of asking broad questions, they focused on specifics tailored to each patient. For instance, with a patient dealing with chronic stress, a clinician might ask the AI, "Considering the patient's history of trauma and current lifestyle factors, what cognitive-behavioral patterns might be perpetuating their stress?"

This approach made a significant difference. The AI offered nuanced perspectives that not only aligned with clinical observations but also introduced angles the clinicians hadn't considered. It was like giving them a knowledgeable colleague to consult, one who deepened their understanding and sharpened their treatment planning.
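As a rough illustration of pairing a clinician's question with case-specific context, the Python sketch below assembles a single prompt. It is a hypothetical sketch, not the platform described in this post: `PatientContext`, `build_prompt`, the opening framing line, and the sample lifestyle factors are all assumptions made for illustration, and sending the prompt to a model is deliberately left out because that depends on whichever AI service a practice uses.

```python
from dataclasses import dataclass, field


@dataclass
class PatientContext:
    """Hypothetical case summary a clinician might attach to a question."""
    presenting_issue: str
    history: list[str] = field(default_factory=list)
    lifestyle_factors: list[str] = field(default_factory=list)


def build_prompt(question: str, ctx: PatientContext) -> str:
    """Combine a clinician's question with case-specific context.

    The question carries the clinical framing; the context keeps the model
    anchored to this patient rather than to generic information.
    Empty context sections are omitted.
    """
    lines = [
        # Hypothetical framing line; wording is an assumption.
        "You are assisting a licensed behavioral health clinician.",
        f"Presenting issue: {ctx.presenting_issue}",
    ]
    if ctx.history:
        lines.append("Relevant history: " + "; ".join(ctx.history))
    if ctx.lifestyle_factors:
        lines.append("Current lifestyle factors: " + "; ".join(ctx.lifestyle_factors))
    lines.append(f"Clinician's question: {question}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical patient details for illustration only.
    ctx = PatientContext(
        presenting_issue="chronic stress",
        history=["history of trauma"],
        lifestyle_factors=["irregular sleep", "long work hours"],
    )
    question = (
        "Considering the patient's history of trauma and current lifestyle "
        "factors, what cognitive-behavioral patterns might be perpetuating "
        "their stress?"
    )
    print(build_prompt(question, ctx))
```

Keeping the clinician's question separate from the patient context makes it easy to reuse one well-crafted question across many patients while only the case data changes.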

Rethinking the Platform: How to Collect Patient-Centric Data

The next step was gathering detailed, patient-specific data, which required reversing the way EHRs work: instead of clinicians typing in data, patients would provide it. That was exciting. We developed tools to help clinicians collect comprehensive information through questionnaires, and we encouraged open-ended discussions during sessions. Patients were asked to keep journals about their daily experiences, triggers, and coping mechanisms.

We had to collect about 1 million patient experiences per quarter before the AI's output became truly meaningful. The outcome shocked us.

Many patients regularly submit detailed digital entries about clinical attributes such as anxiety episodes, which requires very little clinical documentation. By adding this data to the AI prompt libraries, clinicians can identify patterns linking patient anxiety to specific social situations, 12 of which proved to be exceptional barometers of positive outcomes. The AI helps reveal correlations that aren't immediately apparent, allowing for more effective, tailored treatment plans.
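To make the idea of linking anxiety to social situations concrete, here is a minimal Python sketch that assumes each journal entry has already been reduced to a set of situation tags and a 0-10 anxiety rating. It simply compares each situation's average rating with the patient's overall average. That plain association summary is swapped in for illustration; it is not the AI analysis described above, and it shows correlation, not cause. The function names, the thresholds (`min_entries`, `margin`), the data format, and the sample journal are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def anxiety_by_situation(entries):
    """Average anxiety rating for the entries that mention each situation.

    `entries` is a list of (situations, anxiety) pairs: `situations` is a set
    of hypothetical tags such as {"work meeting"}, and `anxiety` is a 0-10
    self-rating. Returns {situation: (mean_rating, entry_count)}.
    """
    ratings = defaultdict(list)
    for situations, anxiety in entries:
        for situation in situations:
            ratings[situation].append(anxiety)
    return {s: (mean(r), len(r)) for s, r in ratings.items()}


def flag_situations(entries, min_entries=3, margin=1.0):
    """Situations whose average rating exceeds the overall average by `margin`.

    Requires at least one entry. Situations seen fewer than `min_entries`
    times are skipped so that a single bad day does not dominate.
    Returns (situation, elevation, entry_count) tuples, largest elevation first.
    """
    overall = mean(anxiety for _, anxiety in entries)
    flagged = []
    for situation, (avg, count) in anxiety_by_situation(entries).items():
        if count >= min_entries and avg - overall >= margin:
            flagged.append((situation, round(avg - overall, 2), count))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical journal entries for a single patient.
    journal = [
        ({"work meeting"}, 8),
        ({"work meeting", "commute"}, 7),
        ({"family dinner"}, 3),
        ({"work meeting"}, 9),
        ({"family dinner"}, 2),
        ({"commute"}, 5),
        ({"family dinner", "commute"}, 4),
    ]
    print(flag_situations(journal))
```

For the sample journal in the block, only "work meeting" is flagged: its entries average 8 against an overall average of about 5.43, so the function returns `[("work meeting", 2.57, 3)]`. A real analysis would need far more entries and clinical review before any pattern informed a treatment plan.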

Overcoming Skepticism and Embracing Change

At first, some clinicians were skeptical about using AI and questioned whether it could truly enhance clinical judgment. But as they saw their patients improve (Sarah, for example, showed reduced anxiety and increased confidence), they became more curious.

We explained that the key wasn't to rely on AI blindly but to use it as a tool to augment their clinical skills. When clinicians ask the right questions and collect thorough patient data, AI can provide insights that might otherwise take them much longer to uncover.

The Impact on the Industry

Integrating AI into clinical practice has been a game-changer. Clinicians are reporting remarkable improvements in their patients and feel better equipped to handle complex cases. They've become more efficient without sacrificing quality of care, and they're consistently increasing the number of patients enrolled each month.

Their reputations have grown, too. Other professionals seek their input on challenging cases, and patients refer friends and family, citing the depth of understanding they experience in sessions.

Conclusion

I understand the hesitation some might have about embracing AI in mental health practice. But in my experience as an investor in and developer of software platforms, if clinicians master asking insightful questions (a skill they already have) and efficiently collect their patients' responses, AI will make them a lot smarter.

So be wary of the AI naysayers. Embracing AI as a powerful ally can enhance clinical practice and improve patient outcomes.

Clinicians might just find themselves becoming the smartest in their field, with patients who benefit greatly from their enhanced insights.

Along the way, I've learned that success with AI involves a lot of trial and error, what some might call "failing forward." But every challenge overcome and every lesson learned has made a significant impact. AI isn't here to replace clinicians; it's here to empower them. And in an industry that's only getting more demanding, that's something we desperately need.

Would we do it again? Sure we would. Will AI help change behavioral health methods and practices?

We’ll be watching.

Other Blogs

The Plan No One Sees Coming—But Soon Will

Exclusive

Mental Health

Addiction

Drugs

Ant Pheromone Study May Improve Mental Health Outcomes

Exclusive

Mental Health

Addiction

Drugs

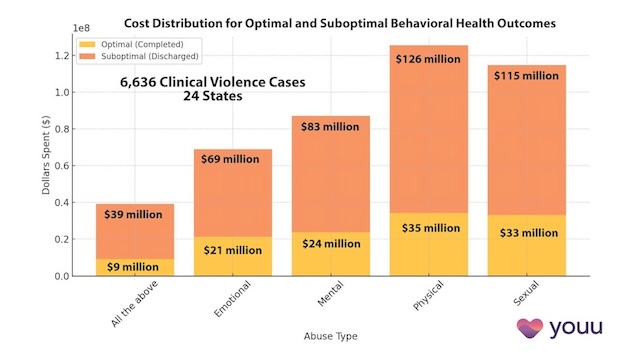

Interrupting Violence Should Be Irresistibly Investable

Exclusive

Mental Health

Addiction

Drugs

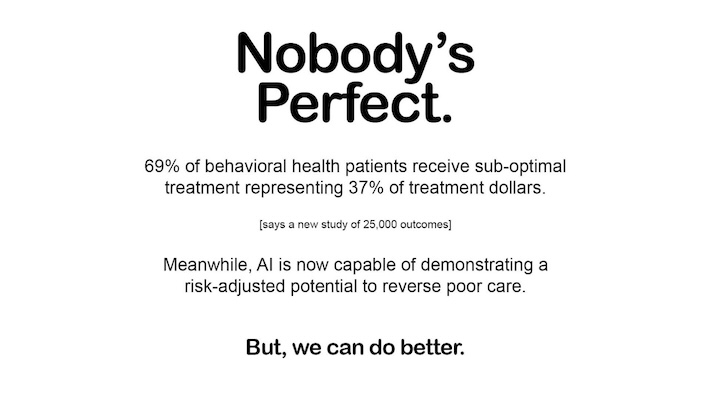

69% of Behavioral Health Patients Receive Sub-Optimal Care According to a New Analysis

Exclusive

Mental Health

Addiction

Drugs

Other Blogs

Have Questions? Lets Meet

Select a time you like to meet with us