Published Date: Jun 1, 2023

AI

Behavioral Health

GPT

We spent 5 million hours engineering AI to work with Behavioral Health: Here’s what we learned.

The article describes the process of engineering artificial intelligence (AI) to work with behavioral health, and the insights gained from this effort. The authors share the importance of using a human-centered approach in developing AI tools for mental health care, as well as the potential for these tools to improve access to care, reduce stigma, and enhance treatment outcomes.

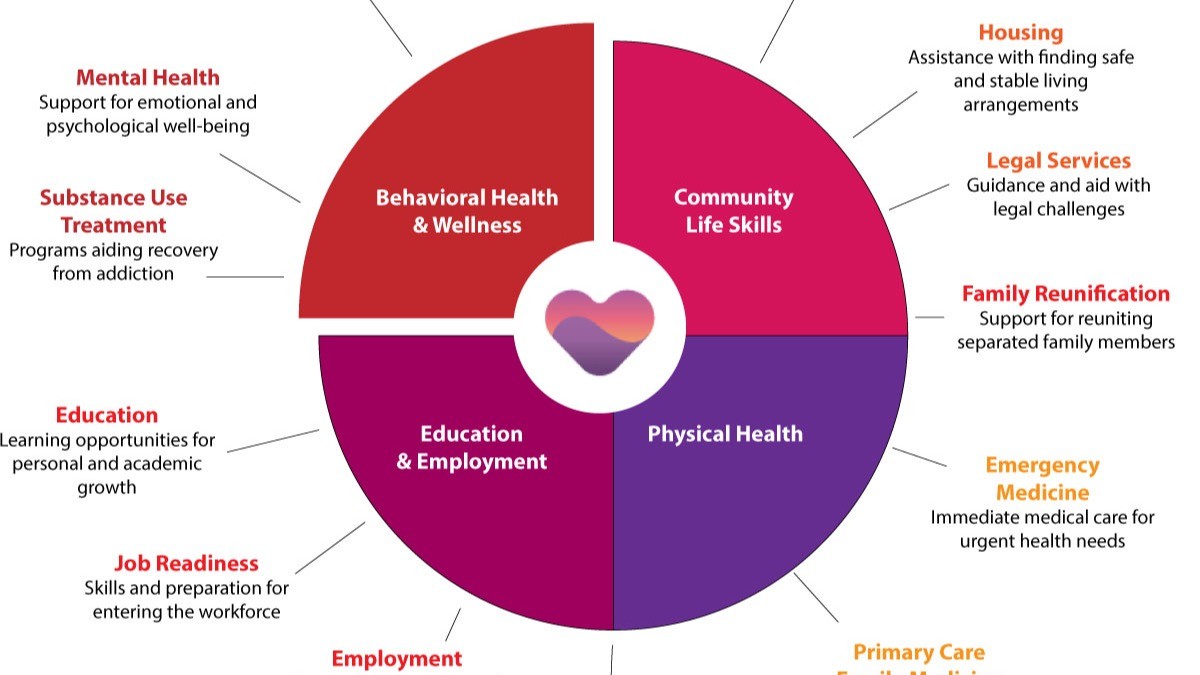

The use of Augmented Intelligence (AI) is one of the most promising technological developments for chronic behavioral healthcare. The problem is big, complex, and vastly under-resourced. Behavioral health, spanning both chronic substance use and mental health, contributes to a whopping 60% of the total cost of health care. Solving it is going to take big, novel ideas.

As a philanthropist and private equity investor, I’ve put AI into practice for over 20 years serving millions of consumers. I studied it at MIT and Harvard. Through my associations there, I’ve had some eye-opening epiphanies and made my share of mistakes. In my case, it wasn’t until 2021, when we were among a very small number of companies to workbench the earliest version of GPT, or “generative pre-trained transformer”, that my team took a deep interest in behavioral health. Why we did it is a long story. But it is now clear that behavioral health is one of the most interesting uses of this technology. Today, the world has been introduced to its simpler consumer version, ChatGPT, and has the chance to taste its power.

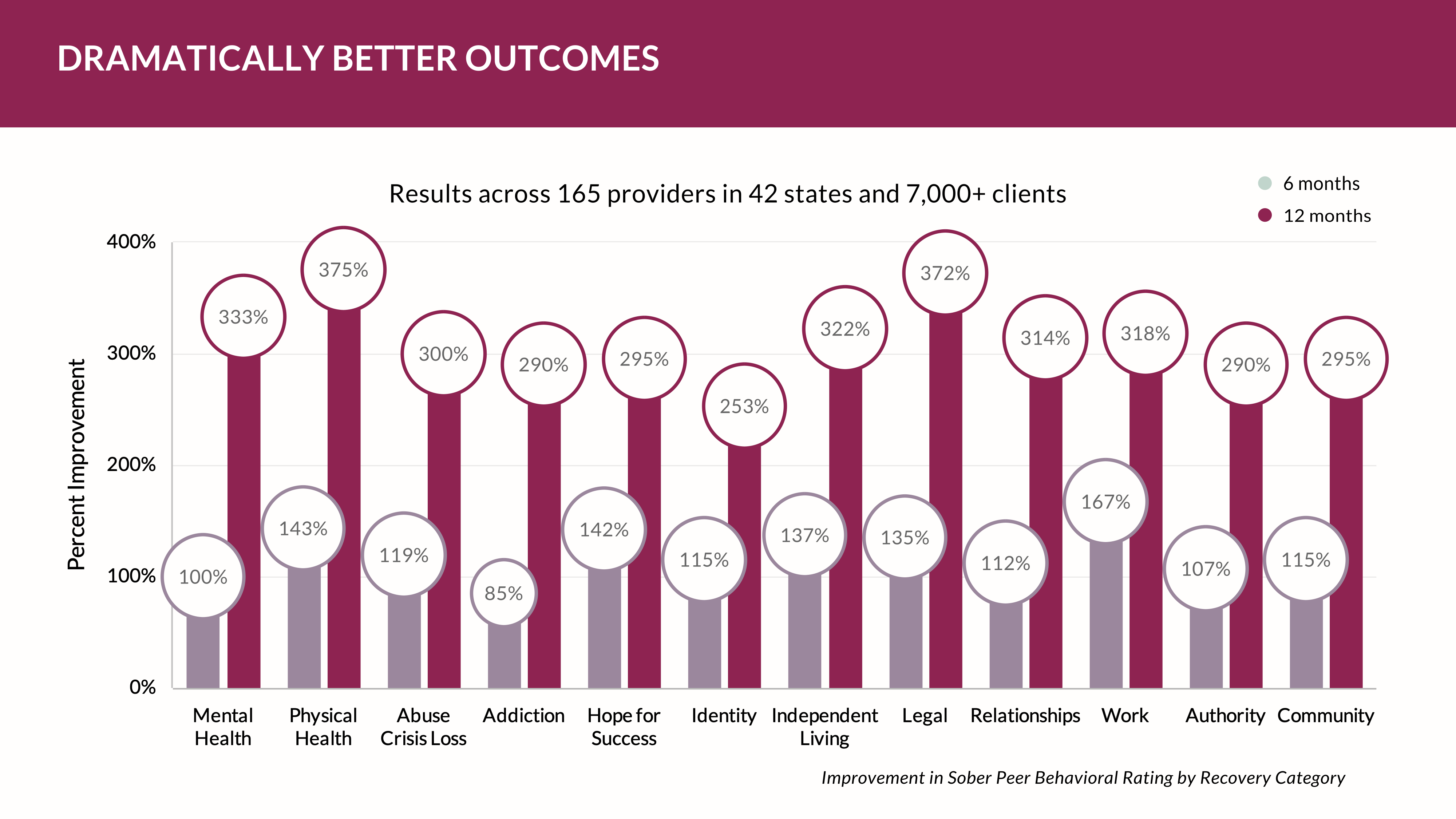

Before you rush out and declare that GPT, or one of its competing language models, is going to be in every electronic healthcare system, it’s important to understand that for healthcare, augmented intelligence still relies on power-developers and engineers. It’s a heavy lift to keep it relevant and ethical for a clinical setting. It takes a ton of resources to train it to work the way we humans do in our health practices. It’s also important to realize it will not replace a human clinician per se, but it will make them smarter while virtually eliminating the mundane work that causes burn-out among a short-handed, under-skilled workforce. In our research, our models can pass complex clinical certification and licensure tests at, or above, the competency of the most well-trained professional. That’s an accomplishment of a higher order.

So, how did we do it?

Our process of discovery looked like this: we started with a few engineers and a UI/UX design team. In an instant, that became about 2,500 people around the globe in what we call the “YOUUniverse”, tasked with training a private dataset. The result made our AI relevant and ethical for a healthcare service within our application audience. Do the math and that’s an annual effort of five million hours training our model to be smart. Shockingly smart.
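The five-million-hour figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes each of the roughly 2,500 contributors works about 40 hours a week for 50 weeks a year; those per-person figures are assumptions made here for illustration and are not stated in the article, and the function and constant names are hypothetical.

```python
# Back-of-the-envelope check of the annual training effort.
# ASSUMPTION: each contributor works about 40 hours a week for 50 weeks a year.
CONTRIBUTORS = 2_500      # people cited in the article
HOURS_PER_WEEK = 40       # assumed, not from the article
WEEKS_PER_YEAR = 50       # assumed, not from the article


def annual_training_hours(people: int, hours_per_week: int, weeks_per_year: int) -> int:
    """Return total person-hours per year for a team of the given size."""
    return people * hours_per_week * weeks_per_year


total_hours = annual_training_hours(CONTRIBUTORS, HOURS_PER_WEEK, WEEKS_PER_YEAR)
print(f"{total_hours:,} hours per year")  # prints "5,000,000 hours per year"
```

Under different assumptions about part-time or volunteer contributors, the total would scale accordingly.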

While there are countless important uses for AI across the health community, here are nine we see as among the most important:

Diagnosis and treatment planning: Augmented intelligence can assist mental health professionals in diagnosing and treating patients, and in developing personalized treatment plans based on patient data and characteristics.

Predictive analytics: Augmented intelligence can be used to analyze patient data and identify patterns or trends that may be relevant to treatment. This can help mental health professionals identify and intervene with patients who may be at risk of worsening symptoms or relapses.

Symptom tracking: Augmented intelligence can be used to assist patients in tracking and managing their symptoms, as well as to provide alerts and recommendations for care.

Virtual therapy: Augmented intelligence can be used to deliver virtual therapy sessions and other mental health support, allowing patients to access care remotely or when in-person therapy is not possible.

Medication management: Augmented intelligence can be used to assist patients in managing their medication regimens, including reminders and alerts for refills and dosage changes.

Substance use monitoring: Augmented intelligence can be used to assist individuals in recovery from substance use disorders in tracking and managing their progress, as well as to provide alerts and recommendations for care.

Social support: Augmented intelligence can be used to connect individuals with mental health and substance use disorders with social support networks and peer groups, helping them feel less isolated and more supported.

Care coordination: Augmented intelligence can be used to assist mental health and substance use providers in coordinating care and communication with other providers, such as primary care doctors or specialists.

Research and development: Augmented intelligence can be used to analyze and interpret data from mental health and substance use research, as well as to assist in the development of new treatments and interventions.

Overall, these are just a few of the many important integrations for augmented intelligence in the fields of mental health and substance use. As this technology continues to develop and mature, it is likely to have a transformative impact on the delivery of care and support for individuals with mental health and substance use disorders.

Other Blogs

The Plan No One Sees Coming—But Soon Will

Exclusive

Mental Health

Addiction

Drugs

Ant Pheromone Study May Improve Mental Health Outcomes

Exclusive

Mental Health

Addiction

Drugs

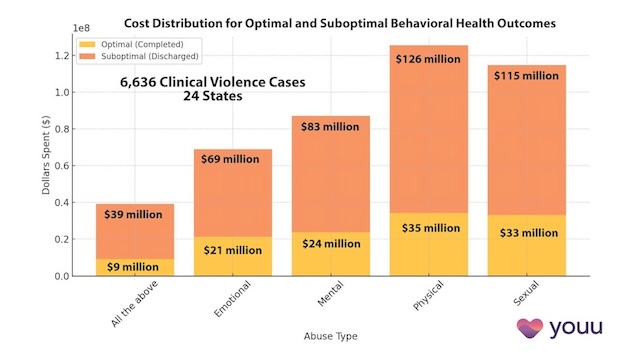

Interrupting Violence Should Be Irresistibly Investable

Exclusive

Mental Health

Addiction

Drugs

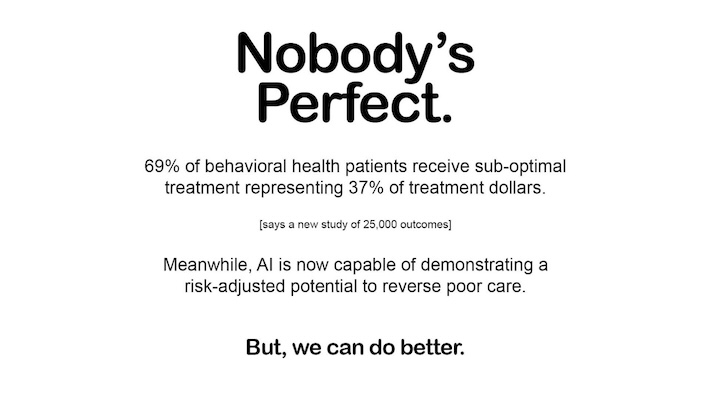

69% of Behavioral Health Patients Receive Sub-Optimal Care According to a New Analysis

Exclusive

Mental Health

Addiction

Drugs

Other Blogs

Have Questions? Lets Meet

Select a time you like to meet with us